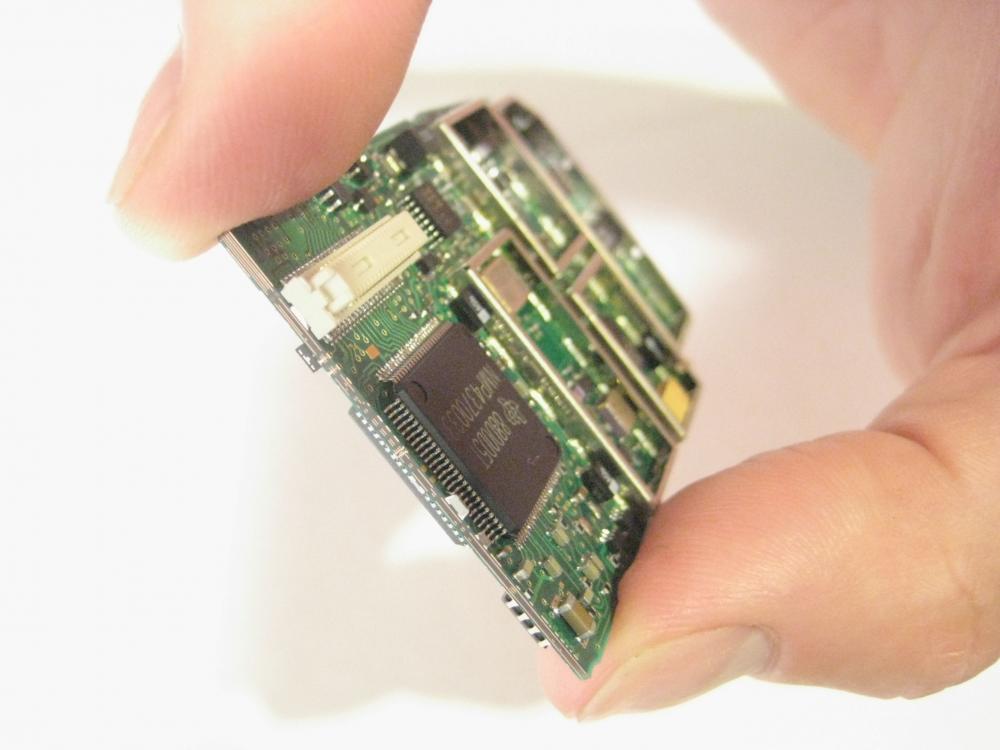

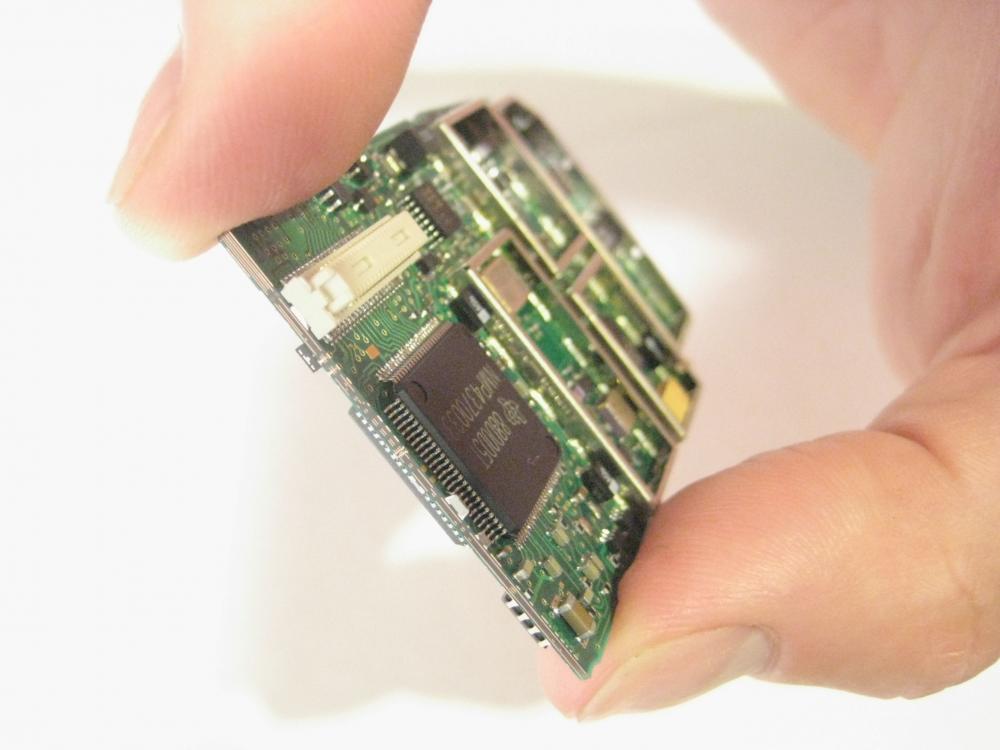

Artificial intelligence has the opportunity to improve lives, but it could also put Georgians’ jobs and safety at risk.

To build an advanced artificial intelligence, you must first invent the wheel.

AI systems like OpenAI’s ChatGPT are the end result of thousands of years of progress, with each great leap building upon the last so that the pace of advancement increases each year.

There could come a day when someone builds an AI smarter than any human, which can improve its code to make a smarter version, which can improve its code to make a smarter version ad infinitum until the AI far exceeds human understanding.

This concept is known as the technological singularity, a point in time when technological advancement leaves human hands, in the blink of an eye making today’s most sophisticated technology look like a caveman’s sharpened stick.

Georgia lawmakers wrestled with this idea Wednesday at a joint hearing of the Senate Science and Technology and Public Safety committees dedicated to discussing future AI legislation.

“My granddaddy used to have a saying about people that he would deal with,” said Sen. Russ Goodman. “He said, ‘you got to watch out for that fella because you can’t outfigure him.’ We’re dealing with something we can’t outfigure.”

The Cogdell Republican listed several near-term concerns he has with the potential misuse of AI, ranging from deepfaked images being used for blackmail and bribery to terrorist cells using the technology to carry out massive attacks.

“It’s almost like a little bit of the Book of Revelations playing out in front of our eyes when we get to talking about some of this stuff, that’s my biggest concern, how do we stop this from being used for evil?” he said.

AI advancements need to come with ethical rules, said Carrollton Republican Sen. Mike Dugan, but that’s easier said than done.

“Even in this room right here, where I have the highest regard for everybody sitting here, every one of our ethics is going to be different,” he said. “And if we do ethics based off governance, whose governance? It’s not a Georgia thing, it’s not a U.S. thing, it’s not a North American thing, it’s a world thing.”

“Should we not have, as much as we possibly could, safety nets on the front side?” he added.

Rogue AI has been a favorite villain for sci-fi writers for decades, from 2001: A Space Odyssey’s HAL 9000 to Terminator’s Skynet.

“Artificial superintelligence is what Terminator 2 is about,” said Peter Stockburger, autonomous vehicle practice co-lead at Dentons law firm. “The singularity, the final act of where AI is smarter than human beings, can actually rewrite its own code to perform its own tasks in the most efficient way. This is often the fear about a nuclear attack, is that AI figures out that the most efficient path forward is to move forward without human beings.”

Stockburger said he’s more optimistic than Terminator 2: Judgment Day director James Cameron.

“I don’t think AI is gonna end up being this singularity,” he said. “It’s not gonna be a single entity that controls everything. It’s actually gonna be an ecosystem of connected AI, smaller AI’s throughout the entire environment, physical, digital.”

This could present its own challenges for lawmakers, he said.

“The challenge is that we can’t govern AI the same way that we govern humans,” he said. “And so our traditional way of lawmaking, our traditional way of developing regulation does not actually fit this mold. And that’s because machines don’t respond to punishment or the traditional incentives of human beings, and they’re also not bound by our own empathy and our own ethical concerns.”

Dealing with that starts with creating technical standards that guide how AIs act with each other and the physical world. He gave the example of a car wreck signaling all of the other autonomous vehicles nearby to automatically take alternate routes so that firefighters and ambulances could get there more quickly.

States could play a major role by passing laws ensuring that any AI-powered system in that state would be able to communicate with all other AIs using the same language. Laws will also need to be written in a way that machines can understand, he said.

“Those socio-technical controls, they prevent it from outfiguring us,” Stockburger said. “Because if you as the state control the network, if you say AI can’t operate in the state of Georgia unless it’s on this protocol on this network. As the state of Georgia, you could set that low bar very low, and you could say we’re only going to allow AI that’s very simple, not very sophisticated.”

“California may take a different approach, but that allows each state to adopt their own approach, and those two AIs can communicate with each other, be interoperable, which is the key.”

Georgia’s hearing came the day after President Joe Biden signed a sweeping executive order on AI. It creates reporting requirements for AI developers and contains provisions intended to address privacy, safety and justice concerns.

In a statement, Biden called it “the most significant action any government has ever taken on AI safety, security, and trust.”

Stockburger said it is important to create guardrails around AI, but the executive order is not the end of the story, and governments need to impose technical standards quickly before AI becomes impossible to rein in.

“A lot of the public discussion around the executive order is the devil’s in the details,” he said. “How is that actually going to get implemented? Who’s going to roll that out? Who’s going to adopt that?”

“This traditional idea around lawmaking, really, our position is that it will hit a ceiling,” he added. “It may not be this year, maybe not next year, but it will hit a ceiling because the technology will advance. I personally am a techno-optimist. I think we’re all going to figure it out. I think it’s going to be used primarily for good, so long as there’s controls in place.”

Stockburger also promoted something called the Prometheus Project, “a public private sandbox for you as legislators to test laws around AI in real time in a sandbox to see how those would be machine-readable and executable by AI” built to use proposed standards.

“Imagine as legislators, you can come up with a proposal and say, ‘we’re going to draft a law that says you cannot do X with your AI system,’” he said. “What if you could simulate that a million times in a simulator with real-world digital twins of the city, of the intersection, and you find out, well, those two million simulations that we just ran, 80% of the time there’s an accident, because somebody always turns left unprotected based on the data that our city has on traffic patterns.”

In Greek mythology, Prometheus brought fire to mankind in defiance of the gods. He was punished by being strapped to a stake and having his liver ripped out by an eagle over and over again for years.

Sen. John Albers, chairman of the Public Safety Committee, promised at least one additional hearing before the next legislative session scheduled to start in January.

“I will tell you that it is my belief that in our lifetime, this is going to be the single biggest demarcation of time and innovation that we’re going to see,” he said. “And it is going to change almost everything in our lives now, moving forward, especially for our children, our grandchildren, and beyond.”

In conversation with AI experts, lawmakers on the panel provided some insight into topics they would like to see discussed during the session.

Albers questioned Fred Miskawi, vice president at CGI Technology and the AI Innovation Expert Services in CGI’s AI Enablement Center of Excellence, about businesses incorporating AI to prepare budgets and teachers incorporating artificially intelligent tutors for students who may need extra help or who are ready to move to more advanced material faster than their classmates.

“You’ve got a one-to-one tutor for the individual that can take the student to the next layer of capacity, to an AP class level,” Miskawi said. “And then because this is used across the classroom, you’re gonna have data, a deeper understanding of where the class is and the type of difficulties that they have.”

“There might be a particular concept that the educator covered, but the way it was covered just clearly did not resonate for the students,” he added. “An AI model can help compensate for that and realize that this is a problem across the class and help not only those bottom four students, but also the entire class.”

Riverdale Democratic Sen. Valencia Seay said her biggest concern is helping seniors, who may be slower to embrace the technology and vulnerable to fraud, which may include scammers using AI to duplicate a loved one’s voice and likeness and using that fake person to ask for money.

Albers advised his colleagues to come up with a safe word for their families to help protect them from such digital doppelgangers.

Sen. Harold Jones, an Augusta Democrat, asked about AI taking over jobs with the example of a solicitor who kept his case count down. An AI could use data collected from that solicitor to help train others.

“You wouldn’t necessarily have to bring me back in as a consultant to talk about how to prioritize cases,” he said. “So I give you all this data, but you’re gonna extrapolate out and know how I would do it to help new solicitors, keep their cases down. Am I gonna be paid for that information?”

Jones also floated the idea of AI courts, presided over by human judges specialized in AI law.

This story comes to GPB through a reporting partnership with Georgia Recorder.