Surreal or too real? Breathtaking AI tool DALL-E takes its images to a bigger stage

When the Silicon Valley research lab OpenAI unveiled DALL-E earlier this year, it wowed the internet.

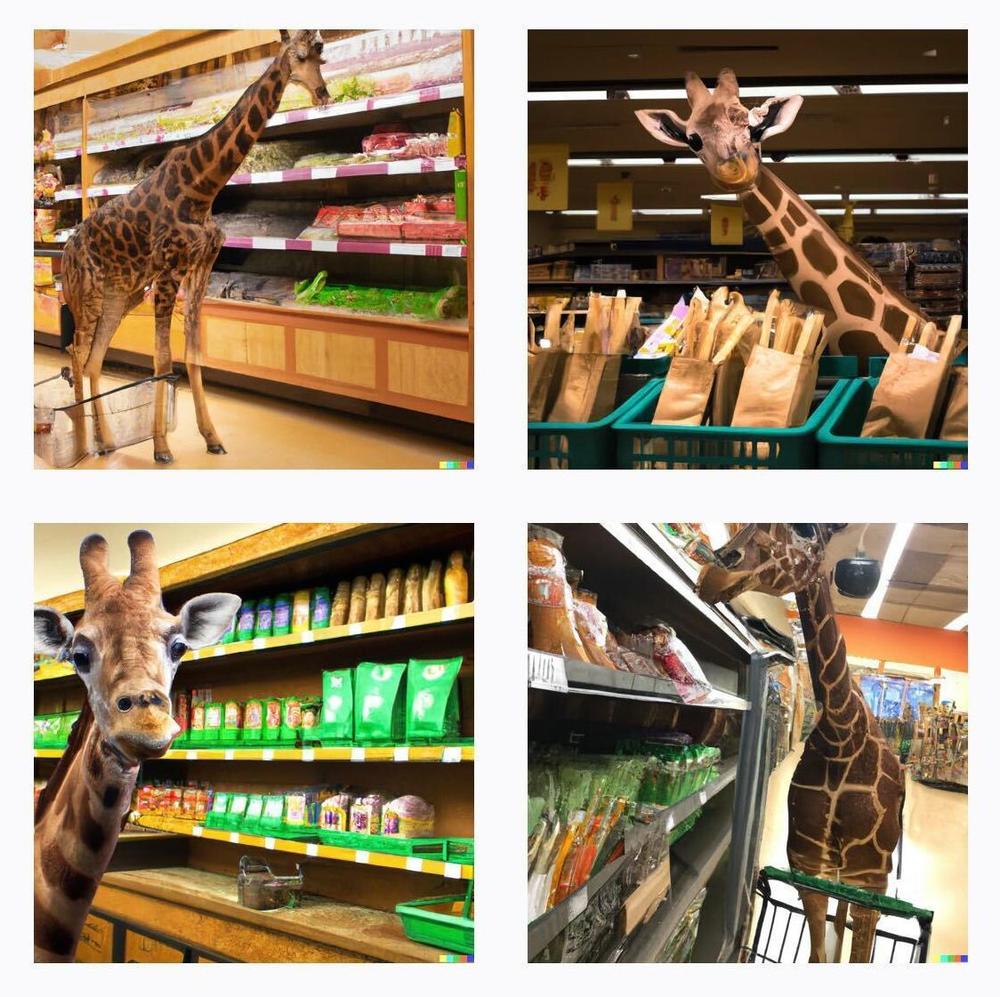

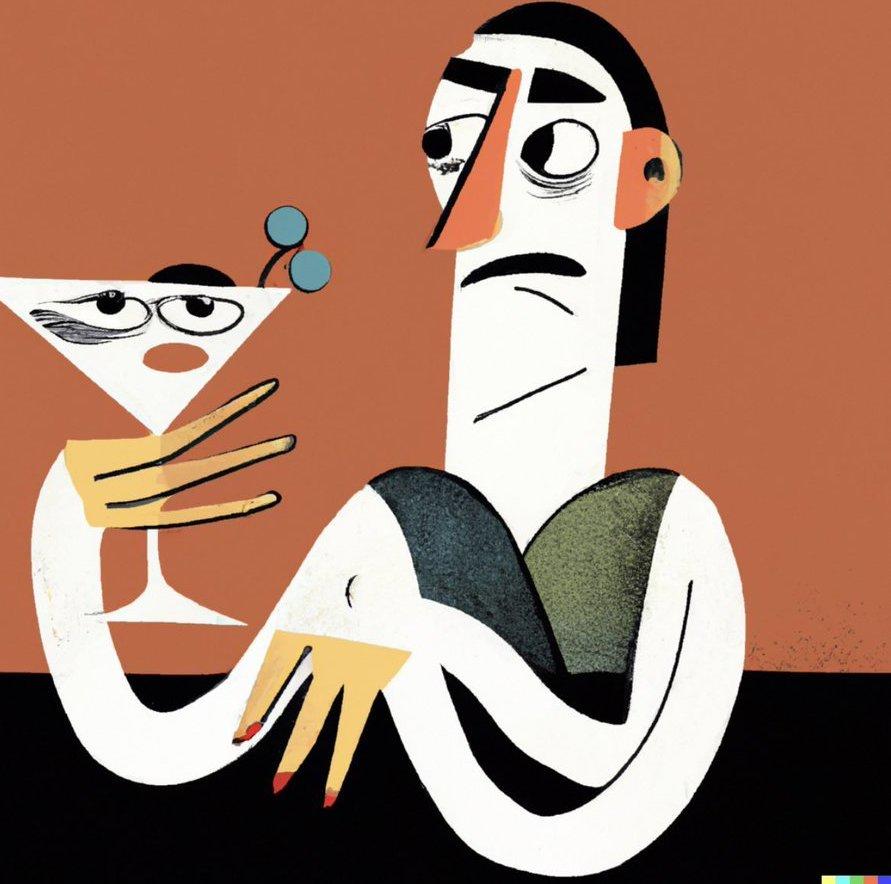

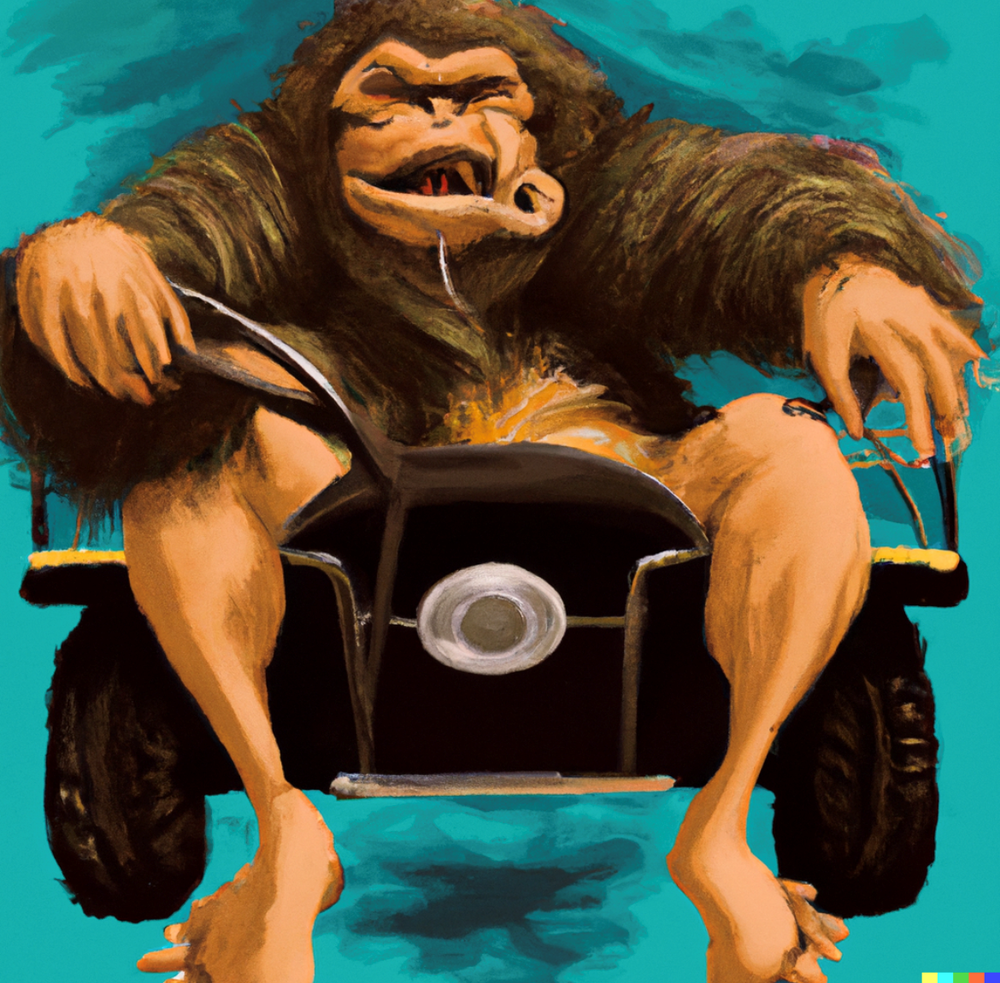

The tool is seen as one of the most advanced artificial intelligence systems for creating images in the world. Type a description, and DALL-E instantly produces professional-looking art or hyperrealistic photographs.

"It's incredibly powerful," said Hany Farid, a digital forensics expert at the University of California, Berkeley. "It takes the deepest, darkest recesses of your imagination and renders it into something that is eerily pertinent."

DALL-E — a name meant to evoke the Pixar film WALL-E and the Surrealist painter Salvador Dalí — is not available to the public. It has been used only by a vetted group of testers — mostly researchers, academics, journalists and artists.

But on Wednesday, OpenAI announced it would invite more people to the party. The company says it plans to let in up to 1 million people from its waitlist over the coming weeks, as it moves from its research phase into its beta stage.

It is unclear whether DALL-E will ever be fully available to the public, but the expansion is expected to be a significant test for the platform, with many researchers watching to see how the technology might be abused.

OpenAI has kept DALL-E closely guarded out of fear that bad actors could use the powerful tool to spread disinformation. Imagine someone trying to use it to fabricate images of the war in Ukraine, or creating realistic images of natural disasters that never occurred.

On top of that, generating an image with the platform is so energy intensive that company officials worried its servers would melt down if too many people tried to use it at once.

The exclusivity created buzz, as droves of people tried to get their hands on the cutting-edge technology, the latest version of which is called DALL-E 2.

The company started a waitlist, which quickly ballooned. The excitement also spurred a free imitation, DALL-E mini. Its renderings, while far less impressive, helped to turn AI image generation into a hobby for some. Recently, DALL-E mini changed its name to Craiyon to avoid confusion. It is not affiliated with OpenAI.

Joanne Jang, the program manager of DALL-E, says the company is still refining its content rules, which currently prohibit what you might expect: violent, pornographic and hateful content. The rules also ban images depicting ballot boxes and protests, or any image that "may be used to influence the political process or to campaign."

DALL-E also bans depictions of real people, and the company expects to add more guardrails as its researchers learn how users interact with the system.

"Right now we think that there are a lot of unknown unknowns that we would like to have a better handle on," Jang said. "We expect to ramp up and rapidly invite more and more people as we gain better confidence."

Experts say that while image creation algorithms have existed for some time, the speed, precision and breadth of DALL-E represent a remarkable advancement in the field.

"What DALL-E is doing is capturing some element of human imagination. It's not actually that different than how humans can read a book and imagine things, but it's being able to capture that intelligence with an algorithm," said Phillip Isola, a computer science professor at MIT who previously worked at OpenAI but is no longer affiliated. "Of course, there are plenty of concerns about how this kind of technology can be misused."

OpenAI was founded in 2015 by Elon Musk, who left its board three years later, and Sam Altman, a protégé of Peter Thiel. It is financially backed by Microsoft and competes with Google, Amazon and Facebook in the race to develop the best AI technology. Those companies are all building AI tools using similar systems.

DALL-E makes it easy to create images

Using DALL-E is simple: You type what you want to see, and seconds later a panel of four images appears.

The possibilities seem endless. You can ask for images that look like photographs or the work of Picasso; images that look like 3D renderings; photos conveying an aesthetic like "post-apocalyptic" or "cyberpunk."

DALL-E will not produce anything if a description of an image violates its content rules. Instead, it will warn users that their accounts could be suspended if they repeatedly try to break the system's rules.

NPR was granted access to DALL-E 2 during its research phase. Users were limited to making 50 images a day to reduce the strain on the company's servers.

In its beta phase announced on Wednesday, OpenAI will allow people to create 50 images during their first month for free. After that, they may create 15 a month. Once that limit is reached, people can pay $15 for another 115 images.

DALL-E, its researchers like to say, rewards specificity: the more precise a description, the better the image. Even abstract ideas can produce surprisingly vivid results.

"It's not often that we get to give users a product experience that feels like magic," Jang said.

"DALL-E knows a lot about everything, so the deeper your knowledge of the requisite jargon, the more detailed the results," wrote London-based art curator and programmer Guy Parsons, who put together an 81-page prompt book for the system.

Jang said that while many of the people testing the service have used it for digital art and design, she has also heard from Alzheimer's researchers who say it could help people with the disease regain memories. Surgeons have used it to show patients what their bodies might look like after surgery. It has also helped chefs dream up new dishes and given engaged couples ideas for wedding rings.

DALL-E draws upon hundreds of millions of images and a brain-inspired model to make art

DALL-E does not rely on the open internet for its raw data. Instead, its researchers have fed the system more than 650 million images that the company has licensed, though company officials are tight-lipped about the details.

DALL-E's algorithm is trained on the images it has ingested and the text captions associated with them, and it makes rapid-fire associations between the two. The art it creates is not a mishmash of existing images. Rather, each image is new, generated by a sophisticated AI model known as a "neural network," so called because it makes connections in ways loosely modeled on the human brain.

"It's kind of like showing a child hundreds of millions of flash cards," Jang said. "And if you show a child enough flash cards, or multiple images of people doing yoga, for instance, and tell them that it's yoga, at some point, they'll learn that yoga involves certain poses, a yoga mat, relaxed Zen impressions.

"That's how DALL-E has learned about concepts and how concepts relate to each other," she said.

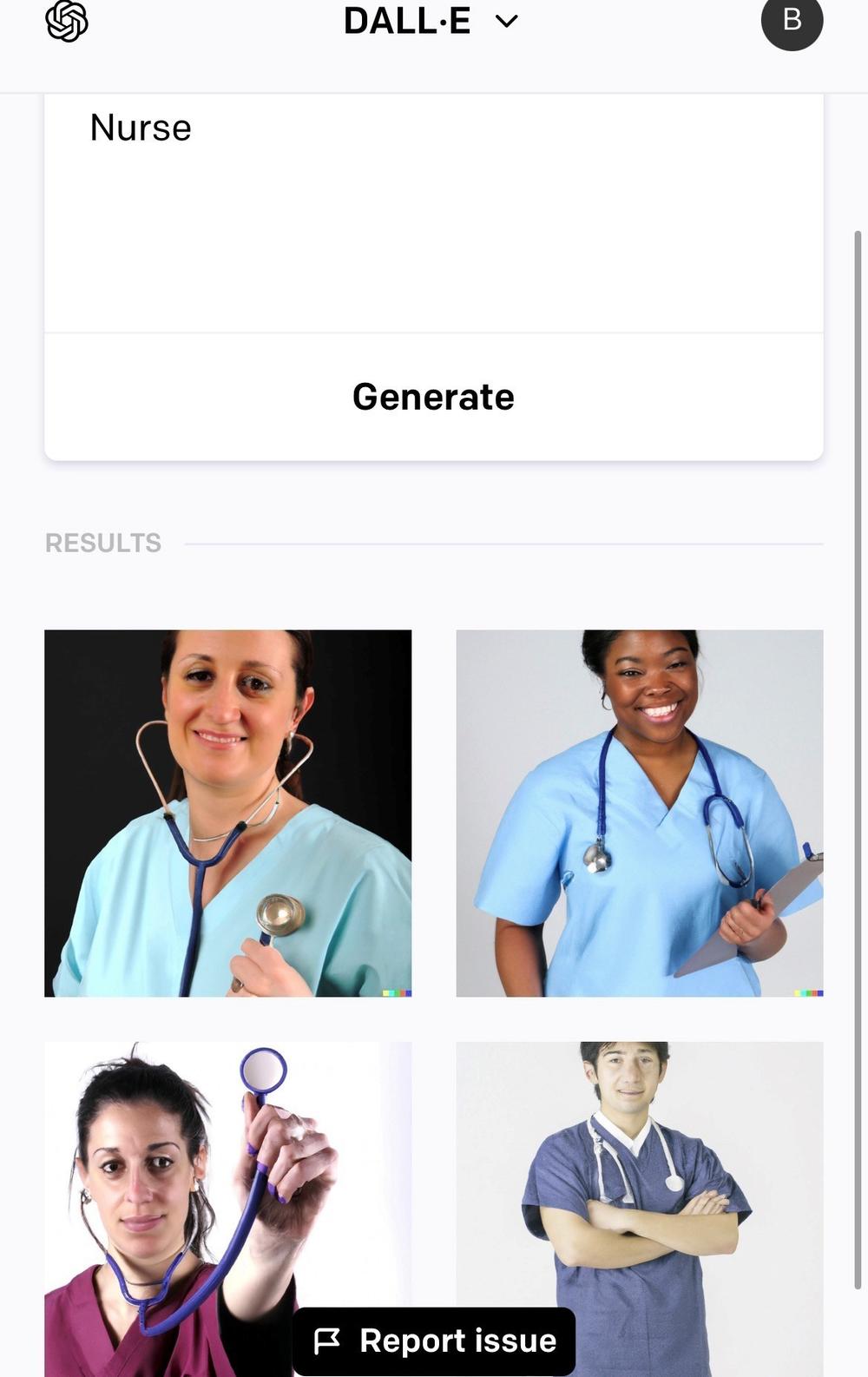

Some academic researchers have criticized OpenAI for keeping its dataset secret, saying that makes it impossible for outside experts to assess what might be driving the harmful stereotypes that appear in the images. Researchers at the company say they are trying to combat those stereotypes internally.

During the early stages of the technology, a prompt for "lawyer" returned images of mostly white men. When a user tried to generate an image for "nurse," DALL-E depicted only women. Jang also said the team noticed that when sexual images were removed from the dataset, the representation of women in DALL-E's images dropped significantly.

Now, Jang says, the algorithm has been recalibrated so that there is more gender and racial representation in its image results.

There are ways to evade some of DALL-E's content rules. For example, images of blood are banned. But users can generate similar images by typing "red liquid."

The company says it is monitoring the tool and will terminate the accounts of those trying to abuse the technology.

Farid, the Berkeley researcher, said that with up to 1 million new users joining, deeply troubling examples are only a matter of time.

"This could be disinformation on steroids," he said. "People are going to find ways around the rules."

Copyright 2022 NPR. To see more, visit https://www.npr.org.